When I set out to build Attachment Architect, the main obstacle was clear: how do you scan a Jira instance with over 100,000 issues on a serverless platform designed with timeout constraints? The standard Atlassian Forge

When I set out to build Attachment Architect, the main obstacle was clear: how do you scan a Jira instance with over 100,000 issues on a serverless platform designed with timeout constraints? The standard Atlassian Forge scheduledTrigger runs only every five minutes. A rough calculation indicated it would take nearly two days to completely scan a large instance. This wasn’t a product; it was a science experiment. This is the story of how I abandoned the standard method, navigated Forge’s restrictions, and built a hybrid scanning engine that is more than 20 times faster, turning a two-day marathon into an 2-hour sprint.

The Initial Failure: The Naive scheduledTrigger Approach

All Forge developers start at this point. My initial prototype used a basic scheduled trigger that would run every 5 minutes, process a tiny batch of issues, and save its state. While it was dependable, it was unimaginably slow.

+----------------------+

| Trigger 1 (10:00 AM) | --- Processes Batch 1 ---> Sleeps for 5 mins

+----------------------+

+----------------------+

| Trigger 2 (10:05 AM) | --- Processes Batch 2 ---> Sleeps for 5 mins

+----------------------+

+----------------------+

| Trigger 3 (10:10 AM) | --- Processes Batch 3 ---> ...and so on.

+----------------------+

The result: an issue processing rate of just ~40 issues/minute. The complete scan of 100,000 issues was expected to take about 42 hours.

I knew this was unacceptable. The user experience would be terrible. Nobody is going to wait two days for their initial results. I had to find a method to make the process continuous rather than intermittent.

The Breakthrough: The Self-Invoking Webhook Pattern

Then, an amazing thing happened. I stopped depending on Forge to “wake up” my app every 5 minutes and instead, made my app “wake itself up” right after each batch.

Here is the process:

- The user clicks “Start Scan.”

- This action calls a Forge resolver that processes the first batch.

- When Batch 1 is fully processed, instead of stopping, it makes a

fetchcall to its ownwebTriggerURL, in a way saying to itself: “I am finished, process the next batch right now.” - The

webTriggerthen takes care of Batch 2, and when it’s done, it calls its own API once again. - This results in a fast, uninterrupted processing chain that is only limited by the Jira API, effectively ignoring the 5-minute

scheduledTriggerrestriction.

The implementation of this technique resulted in a reduction in the scanning time for 100,000 issues from 42 hours to just over 2 hours.

[User Clicks 'Start Scan']

|

v

+--------------------------------+

| Resolver (Processes Batch 1) | --[fetch]--> (Calls its own Web Trigger)

+--------------------------------+ ^

| |

(Updates UI) |

|

+--------------------------------+ |

| Web Trigger (Processes Batch 2)| --[fetch]--> (Calls itself again)

+--------------------------------+ ^

| |

(Saves state) |

|

+--------------------------------+ |

| Web Trigger (Processes Batch 3)| --[fetch]---- ... and so on

+--------------------------------+

Adding a Safety Net: The Hybrid Engine

But what if the user closes their browser? The “active” webhook chain might halt. At this point, I devised a hybrid system that would provide the best of both worlds.

But what if the user closes their browser? The “active” webhook chain might halt. At this point, I devised a hybrid system that would provide the best of both worlds.

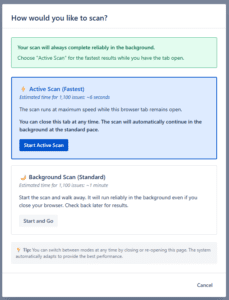

The idea was to use the ultra-fast webhook method when the user was actively watching, but to have the reliable scheduledTrigger act as a “watchdog” that would seamlessly take over if the active process stopped for any reason.

How it Works (Simplified):

- Frontend Active Mode: While the dashboard is open, it pings a backend function every second, which through the webhook chain triggers the next batch.

- Scheduled Trigger “Watchdog”: The old 5-minute trigger still runs. Its first task is to check a timestamp: “When was the last batch processed?”. If it was less than 2 minutes ago, it means the active mode is running, so the watchdog does nothing. If it was more than 2 minutes ago, it means the active process has stopped, and the watchdog automatically takes over, processing batches in the slow but guaranteed background mode.

Conclusion: Lessons I Learned for Forge Developers

This journey taught me some very important lessons about building high-performance, enterprise-grade apps on Forge:

- Don’t Fear the Limits, Embrace Them: I understood that the limitations of Forge (like the 25-second function timeout and 5-minute triggers) are not obstacles; they are boundaries that compel you to write better, more robust, and more scalable serverless code.

- Build a Resilient, Hybrid System: The combination of real-time and scheduled processes gives you the advantage of both speed and reliability.

By sharing my technical journey, I hope to help other developers in the Atlassian ecosystem build even more amazing things. And if you wish to see this engine working, feel free to go to the Marketplace and check out Attachment Architect.

See Attachment Architect on the Atlassian Marketplace

Beyond the Scanner: What’s Next for Attachment Architect?

Building a high-performance scanning engine was just the first, critical step. The true vision for Attachment Architect is to create a complete, intelligent governance platform. Here’s a sneak peek at what I’m building next:

- Custom Scan Scopes: Soon, you’ll be able to run scans not just on your entire instance, but on a specific project or even based on a custom JQL query. This will allow for incredibly fast and highly-targeted audits.

- Automated Governance Policies: The next major leap is moving from analysis to action. I’m building a powerful policy engine that will let you create “set-it-and-forget-it” rules to automatically manage new duplicates and enforce data retention policies.

- Deep Content Security Scanning: The ultimate goal is to help you find hidden risks. The upcoming security module will scan the content of your files for potential secrets, like exposed API keys or Personal Identifiable Information (PII), transforming your attachment library from a blind spot into a governable asset.